Whether you are training a deep learning model or rendering a complex scene, spreading the workload across two GPUs can take significantly less time than sticking with one.

For most readers, the preferred approach would be to install two GPUs in a regular consumer system with enough expansion slots. After all, most recent motherboards provide enough PCIe lanes to give each GPU at least eight lanes.

However, you may have noticed that only a few GPUs support multi-GPU technologies like Nvidia’s SLI or the newer NVLink. Support for SLI/NVLink is also increasingly restricted to more expensive GPUs. For context, here is a list of GPUs that support SLI/NVLink:

| Budget | Midrange | Premium | Prosumer |
|---|---|---|---|
| – | – | – | RTX 3090 |
| – | RTX 2070 SUPER | RTX 2080 Ti, RTX 2080 SUPER | Titan RTX |
| – | GTX 1070 | GTX 1080 Ti, GTX 1080 | Titan X |
| GTX 960, GTX 950 | GTX 970 | GTX 980 Ti, GTX 980 | GTX TITAN X |

Notice how support has gradually disappeared from lower-priced GPUs with each generation. A plausible reason is that lower-tier hardware has become fast enough that a single GPU is more than enough for most workloads.

Can you use two different graphics cards in one computer?

The good news is that you only need identical GPUs and multi-GPU tech like NVLink or SLI if you want to combine the processing and VRAM resources of the two graphics cards. If the cards simply work on separate parts of the job in parallel, they do not have to match.

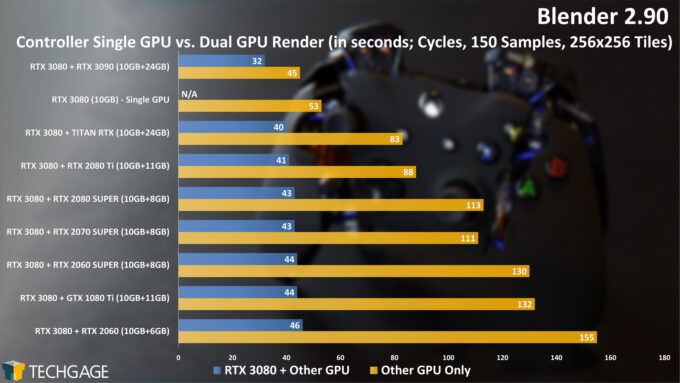

However, you must balance the workload among your GPUs so that they finish rendering at about the same time. Otherwise, a slower GPU leaves the faster one waiting, which increases the overall render time.
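One way to picture this balancing is to give each GPU a share of the frames in proportion to its speed. The sketch below is only an illustration, not how any particular renderer schedules its work; the function names `split_frames` and `finish_time` and the relative speed figures are hypothetical.

```python
def split_frames(total_frames, relative_speeds):
    """Split frames across GPUs in proportion to each GPU's relative speed."""
    total_speed = sum(relative_speeds)
    # Give every GPU its proportional share, rounded down.
    shares = [int(total_frames * speed / total_speed) for speed in relative_speeds]
    # Hand any leftover frames to the fastest GPUs first.
    leftover = total_frames - sum(shares)
    fastest_first = sorted(
        range(len(shares)), key=lambda i: relative_speeds[i], reverse=True
    )
    for i in fastest_first[:leftover]:
        shares[i] += 1
    return shares


def finish_time(shares, relative_speeds):
    """The job is finished only when the last GPU finishes its share."""
    return max(frames / speed for frames, speed in zip(shares, relative_speeds))


# Hypothetical pair: the new card renders 2 frames per second, the old card 1.
speeds = [2, 1]
even = [150, 150]
balanced = split_frames(300, speeds)

print(finish_time(even, speeds))      # even split: the old card holds up the job
print(finish_time(balanced, speeds))  # proportional split: both finish together
```

With an even split, the faster card sits idle for half the job. The proportional split keeps both cards busy until the end.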

If your workload can overcome this limitation, you can use two GPUs from different generations. This makes upgrades easier since you can keep using your old GPU alongside a new one. You can technically run both Nvidia and AMD GPUs in the same system, though we would recommend against it due to differences in their architecture.

Another possibility would be using two lower-priced GPUs instead of one more expensive card. For the same price, the pair can offer more processing power in applications like rendering, thanks to a higher total count of CUDA cores or Stream Processors and RT cores.

When to use two graphics cards with SLI:

With most creative and AI workflows being optimized for multiple separate GPUs, you may ask: When do I need SLI?

Although SLI was widely popular up to the GTX 10-series cards, Nvidia replaced it with the similar but more powerful NVLink. NVLink came from Nvidia's data center and workstation lineup and first appeared on consumer cards in the Turing generation (RTX 20-series).

NVLink works on a similar principle to the PCIe interface on your motherboard. However, unlike the PCIe interface, GPUs connected via NVLink need not pass through the CPU to access each other’s resources. NVLink also has a much higher bandwidth.

VRAM is the deciding factor for a majority of workloads. Parallelization mainly occurs by loading identical copies of the data to be rendered onto each GPU’s VRAM. Then, each GPU will process a part of the workload (half in the case of two GPUs), and the output data will be combined into the final result.
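The copy, split, and combine pattern described above can be sketched in a few lines. This is a toy simulation that runs sequentially on the CPU, with no real GPU involved; `render_rows` and `render_in_parallel` are hypothetical names, and the "rendering" is a placeholder calculation.

```python
def render_rows(scene, rows):
    """Stand-in for a GPU render: every row needs access to the full scene."""
    return {row: sum(scene) * row for row in rows}


def render_in_parallel(scene, height, gpu_count=2):
    # Each GPU gets its own identical copy of the scene,
    # so the scene has to fit into every GPU's VRAM.
    copies = [list(scene) for _ in range(gpu_count)]

    # Each GPU renders its own part of the frame
    # (every second row in the case of two GPUs).
    partials = [
        render_rows(copies[gpu], range(gpu, height, gpu_count))
        for gpu in range(gpu_count)
    ]

    # The partial outputs are combined into the final result.
    frame = {}
    for partial in partials:
        frame.update(partial)
    return [frame[row] for row in range(height)]


scene = [1, 2, 3]
# The result is the same no matter how many GPUs share the work.
print(render_in_parallel(scene, height=4, gpu_count=2))
print(render_in_parallel(scene, height=4, gpu_count=1))
```

Because every GPU holds a full copy, adding a second card adds processing power but not usable memory.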

The only option for GPUs with insufficient VRAM would be to split the initial data onto multiple GPUs. Each GPU will still need to access the other’s VRAM, leading to the necessity of a high-bandwidth interface like NVLink. In such scenarios, a GPU with more VRAM is the better option.

Think of a theoretical workload that needs the GPUs to communicate with each other in real time throughout the process, and you’ve got a use for NVLink.

An example would be rendering a complex scene that requires a large amount of VRAM. Using NVLink lets you pool the VRAM of both GPUs to accommodate the associated scene data and render it with the combined processing power of both GPUs’ CUDA, RT, and Tensor cores.
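As a rough way to reason about this, the sketch below checks whether a scene fits on every card as a copy, fits only in pooled VRAM, or does not fit at all. It is a simplification: `choose_placement` is a hypothetical helper, the scene sizes are made up, and real software needs headroom and may not be able to use the full sum of both cards' memory.

```python
def choose_placement(scene_gb, vram_gb_per_gpu, has_nvlink):
    """Pick a simplified strategy for placing scene data on the GPUs."""
    # If the scene fits on the smallest card, every GPU can hold a full copy.
    if scene_gb <= min(vram_gb_per_gpu):
        return "replicate"
    # Otherwise the data must be split, which calls for a fast link.
    if has_nvlink and scene_gb <= sum(vram_gb_per_gpu):
        return "pool"
    return "does not fit"


# Two 24 GB cards, such as a pair of RTX 3090s.
cards = [24, 24]
print(choose_placement(18, cards, has_nvlink=False))  # replicate
print(choose_placement(30, cards, has_nvlink=True))   # pool
print(choose_placement(30, cards, has_nvlink=False))  # does not fit
```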

On the flip side, for deep learning models, if your model can easily fit into a single GPU’s memory, you can use multiple GPUs without NVLink since the data transfer between GPUs is minimal enough to be accommodated via PCIe.
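A back-of-the-envelope estimate can show whether a model is in this comfortable range. The sketch below assumes 32-bit training with the Adam optimizer, which keeps about 16 bytes per parameter (weights, gradients, and two optimizer states), and it ignores activations. The function names and the link speed passed in are illustrative assumptions, not measurements.

```python
def training_memory_gb(num_params, bytes_per_param=16):
    """Rough VRAM needed for weights, gradients, and Adam states (no activations)."""
    return num_params * bytes_per_param / 1e9


def gradient_sync_seconds(num_params, link_gb_per_s, bytes_per_grad=4):
    """Rough time to move one full set of gradients over a link.

    Ignores latency and any overlap with computation.
    """
    return num_params * bytes_per_grad / 1e9 / link_gb_per_s


params = 100_000_000  # hypothetical 100-million-parameter model

print(training_memory_gb(params))          # GB needed before activations
print(gradient_sync_seconds(params, 8.0))  # seconds per step, assuming ~8 GB/s
```

If the first number is well below the VRAM of a single card and the second is small compared with the time of one training step, PCIe is unlikely to be the bottleneck.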

We hope you found this article helpful. Please feel free to share it with your friends and colleagues. You can also ask questions in the comments and leave any suggestions or points that we left out.
